I’ve always wanted to be an architect. To me, architects had superpowers. Imagine putting your thoughts, ideas and dreams onto paper, and watching them unfold from nothing. I carried this fascination forward to graphic design, where I spent hours painstakingly going through a design pixel by pixel until it was ready to be put on paper. I finally settled on data visualization, where I tried to make sense of seemingly random bits of data and then present those insights to a captive audience. Until now.

I recently came across Stable Diffusion, a groundbreaking machine learning model developed and released by Stability AI. It is a latent diffusion text-to-image model trained on images from the LAION-5B database. There are a TON of exciting things about the model itself, and even more about the circumstances around it: the decision to make it open source, the competition, and the ethics.

But before I talk about all of this, I just got it set up on my M2 MacBook Air, and I want to take it for a spin! Here goes...

Shit. Rick Rolled.

So there’s a built-in safety filter that checks whether the generated image looks NSFW, and if it does, it returns this image instead. I was pretty surprised when a couple of my harmless prompts like ‘firemen burning books’ (yes, I’m in a Ray Bradbury phase right now) got flagged, but I was able to reword them and get past the filter.

A sofa in the shape of an avocado.

I also got DALL-E access recently, so I thought I’d try pitting SD against DALL-E on some of their famous examples. Somehow, the DALL-E images looked a lot more refined.

A fox drinking coffee in a cafe.

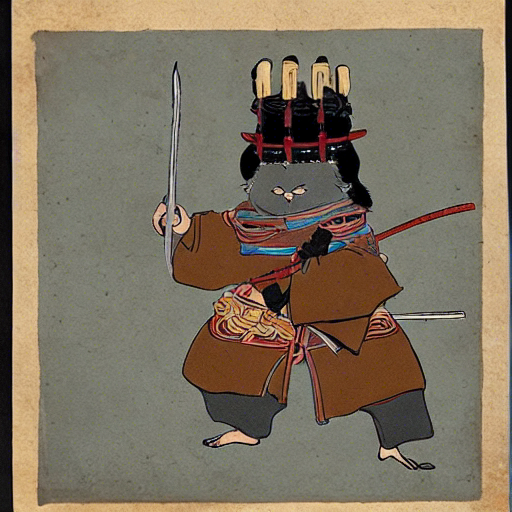

Samurai hamster with a sword fighting in front of a castle.

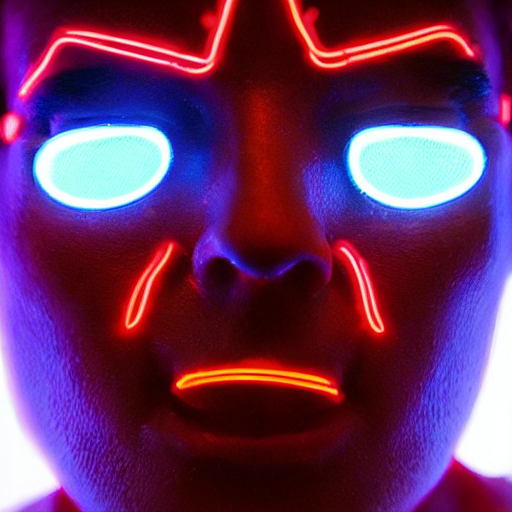

Neon lights on a robot face.

Spongebob fighting godzilla.

Ray Bradbury.

Fahrenheit 451.

The Good

What’s so great about this anyway?

We live in magical times! While generative art has been around for a long time, never before has a tool for creating mind-blowing art been so easily available. And free. We’ve had other tools like SD in the past couple of years, but they had two primary problems.

- The models are expensive to train, and even harder to make sense of. You need a team of data scientists and machine learning engineers working together, which takes extraordinary amounts of time and resources.

- When a company does decide to invest these resources, the model weights and training techniques are not released publicly, but hidden behind expensive APIs.

The Bad

Umm... Shit. Remember deepfakes?

Did we just give bad actors the power to create unlimited realistic imagery? Coupled with models that generate pages of realistic text on demand, this could wreak havoc on an unprecedented scale.

The Ugly

We’re in uncharted territory now.

Where does the law stand on this? Nowhere.